The Internet and the Web

Indexing and ranking of web pages

Real-life complications

Sentiments of humanity

Further reading and viewing

One of the most surprising things about technological development is that it is so hard to predict! Who in his right mind would have thought in the summer of 1957 that men would walk on the Moon in less than 12 years?

When, as a schoolboy in the 1950s, I tried to imagine the future, my expectations were very different from what it turned out to be. A naive extrapolation of the previous 50 years suggested ever faster means of transportation: supersonic flight, interplanetary travel, and new forms of mass transportation, such as "slidewalks" and personal aircraft; transformation of cities beyond imagination; robots perhaps; walls turned into TV screens... And a high probability of thermonuclear war.

Yet, today in 2010 when I look around, the surprises lie in a quite different direction. The cities, the streets, the railways, even air traffic all look remarkably unchanged. Of course, there is more traffic, larger cities, automated factories etc., but still... My grandfather, who was 13 when the Wright brothers demonstrated controlled heavier-than-air flight, and 79 when Apollo 11 landed on the Moon, would probably have been less impressed with the rate of technological change since then, had he witnessed the present time. (I have his diaries from 1917 to 1969, in which he notes when he buys his first car and his first radio, makes his one and only air trip, gets his first TV set, records the first lunar landing, etc. Unfortunately, he does not share his thoughts on these events.)

Instead, what has impacted our lives in ways very few of us could foresee is Information Technology; in particular computers and telecommunications which have led to the Internet. The Internet started as a defense research project in the U.S. in the 1970s, seeking to allow computers to communicate with each other. The idea was that a distributed network of computers would be less vulnerable to nuclear attack. Scientists and universities started using the technology from around 1980, and its use has been growing exponentially ever since.

Now, one of the characteristics of exponential growth is that it can go on for a long time before it reaches a critical threshold and captures general attention, just as the sudden appearance of an algal mat in a pond may surprise you, although it has in fact been developing for some time. The Internet's sudden appearance on the "radar screen" of a Swedish Minister of Communications was famously reported in 1996: "Everybody talks about the Internet now, but this may be temporary." Actually, traffic on the Internet roughly doubled every year for a long time after its inception. Although the rate of growth may be slowing, two thirds of the world's population now has access to the Internet.

My own use of the Internet started in 1991, when I led a multinational consortium performing a technical study for ESA. We used a service called Omnet to exchange messages and documents electronically. (It is already becoming difficult to recall how such work was done in the pre-Internet age!) Omnet was designed to help scientists keep up with developments in their own fields.

Once personal computers became affordable, there were two key developments that brought the Internet "to the masses". The first was the creation of the "World Wide Web" in 1991 together with the "Hypertext Markup Language" (HTML) at CERN, followed shortly thereafter by the first widely used web browser (Mosaic). This is what made it possible to "surf the Web", i.e. to follow links from one document to the next, possibly located on a server in a different country or continent. All you needed in order to access the collective knowledge of mankind was a personal computer and a telephone connection! A whole universe opened up to be explored. Surfing the Web became a new way of finding knowledge, ideas and friends. Not only was it informative, it was downright addictive!

But one crucial element was missing. In the absence of a directory, each user was forced to build and save his own list of useful Web addresses. (The situation was reminiscent of the old Soviet Union, where telephone directories were hard to come by, as a matter of policy.) Thus, the second revolutionary development was the creation of "search engines", i.e. computer programs that would find those documents on the Web that were relevant to you in response to your queries.

As a first step, "web crawlers" were developed, which systematically followed every link and created a listing of all pages on the Web. These could then be indexed and searched according to content, just like an encyclopedia. At first, "content" meant the title and the keywords submitted with each page. Later, in 1995, the complete text of all accessible web pages was indexed (the AltaVista search engine).

But even this was not very satisfactory. Even a restricted search could return hundreds of hits, which were listed in a more or less random order. It was very time-consuming to sift the relevant pages from the chaff (as I experienced first-hand in 1996, when I used the Web to trace and find a relative who had been out of contact for more than 15 years). The problem grew as the databases expanded. What was needed was a good way to rank pages, so that those most likely to meet your requirements landed at the top of the list.

The problem persisted and grew until a company named Google was formed in 1998. Its solution rapidly gained acceptance. By 2000 Google had become the world's most popular search engine and had even become a household word: to "google" someone.

Google's solution was devised in 1996 by two bright graduate students at Stanford University, Larry Page and Sergey Brin. The basic idea was to include the number and quality of pages linking to a given page in that page's ranking, in an iterative process. The theory was that many people would link to a useful page, while nobody would link to a useless page.
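
The iterative idea can be illustrated with a few lines of Python. This is only a minimal sketch on a made-up four-page web, not Google's actual code: the function name `pagerank`, the toy link table and the number of iterations are my own assumptions, and the damping value of 0.85 is the one mentioned as typical in Ref. 2 below.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Rank pages by the number and rank of the pages linking to them.

    `links` maps each page to the list of pages it links to.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start with equal ranks
    for _ in range(iterations):
        # Every page gets a small base rank regardless of links.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its rank on, split evenly over its links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page without outgoing links spreads its rank over all pages.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical toy web: three pages link to "c", and "c" links back to "a".
toy_web = {"a": ["c"], "b": ["c"], "c": ["a"], "d": ["c"]}
ranks = pagerank(toy_web)
best = max(ranks, key=ranks.get)
```

In this toy web, page "c" ends up on top because three pages link to it, and page "a" comes second because its single incoming link is from the highly ranked "c". That is the "quality" part of the idea: one link from an important page can count for more than links from obscure ones.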

When you hear this, you have to ask yourself, along with thousands of other search engine users: "Now why in the world didn't I think of that and start a company that would make me a billionaire?" Well, I think most people would be instinctively skeptical of a scheme that involves mapping the cross-connections between literally hundreds of millions of documents. You have to be an expert to evaluate the technical implications. But you also need a youthful optimism to even consider tackling the obstacles that are bound to arise, many of which are quite hard to foresee.

Now when I enter a search word in Google, it returns a list of the most promising pages within a fraction of a second. For instance, I just googled "Messi". Google reported that there were about 24 million results and returned a list in 90 milliseconds. How is this possible? (Sadly, you will not get a wholly satisfactory answer from me.)

Indexing and ranking of web pages

The key to rapid search is to do most of the work in advance, so that only a look-up in a table is required when you actually enter your query. The information you will require has already been pre-processed long before you need it, just as an encyclopedia is compiled in advance and ready to be consulted at a moment's notice.

The collection of all the world's web pages is done automatically by "crawlers" in a never-ending process. These are sophisticated programs that find and download copies of all the pages on the web by following all links between web pages. They are guided by instructions to revisit highly ranked pages frequently and lower-ranked pages less frequently. A popular on-line newspaper will be visited many times a day, while a more modest site (such as this one) will receive a visit only every few weeks. A deep search of all pages on the Web is carried out even less frequently. Crawler programs ignore web pages that carry the proper blocking instructions in their HTML source code. They also take care not to spend too much time at any given site, since that might overload the site and affect its response time.
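
To make the crawling process a little more concrete, here is a minimal sketch in Python. It does not touch the network; it walks a small, made-up "web" held in memory. The table `TOY_WEB`, the `blocked` flag (standing in for the blocking instructions in a page's source code) and the per-site limit `max_pages_per_site` (standing in for the crawler's politeness) are all assumptions of mine. For simplicity, a blocked page is neither indexed nor are its links followed.

```python
from collections import deque

# Hypothetical in-memory web: URL -> (blocked flag, outgoing links).
TOY_WEB = {
    "news.example/": (False, ["news.example/sports", "blog.example/"]),
    "news.example/sports": (False, ["news.example/"]),
    "blog.example/": (False, ["blog.example/private", "news.example/"]),
    "blog.example/private": (True, ["blog.example/secret"]),
    "blog.example/secret": (False, []),
}


def crawl(seed, web, max_pages_per_site=2):
    """Follow links breadth-first from `seed` and return the pages collected."""
    seen = {seed}
    queue = deque([seed])
    collected = []
    fetched_per_site = {}
    while queue:
        url = queue.popleft()
        site = url.split("/", 1)[0]
        if fetched_per_site.get(site, 0) >= max_pages_per_site:
            continue  # politeness budget for this site is used up
        fetched_per_site[site] = fetched_per_site.get(site, 0) + 1
        blocked, links = web[url]
        if blocked:
            continue  # the page asks to be left alone
        collected.append(url)
        for link in links:
            if link in web and link not in seen:
                seen.add(link)
                queue.append(link)
    return collected


pages_found = crawl("news.example/", TOY_WEB)
```

A real crawler would in addition keep a schedule of when to revisit each page, which is where the distinction between the popular newspaper and the modest site comes in.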

Once the "raw" web pages have been downloaded, the analysis takes place. A number of parameters are extracted for each page, and are associated with that page. Such information will typically include the page's title and keywords, its language, the incoming and outgoing links for each page, the font size of the text elements, the date when the page was last visited etc. Each word of the text is indexed, and its frequency in the text is noted.

It does not stop there. The structure of the text is analysed so that the proximity of several search words in the text is recorded. For instance, if you google for 'white' and 'house', you expect to find the U.S. president's White House at the top of the list, so it is not sufficient to identify just those pages with both 'white' and 'house' somewhere in the text.
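
One way to record proximity is to store the position of every word, not just its frequency. The Python sketch below does this for a single text; the function names `positions` and `min_distance` and the two sample sentences are hypothetical, chosen only to illustrate the point.

```python
import re


def positions(text):
    """Map each word to the list of positions where it occurs."""
    result = {}
    for pos, word in enumerate(re.findall(r"[a-z0-9]+", text.lower())):
        result.setdefault(word, []).append(pos)
    return result


def min_distance(text, first, second):
    """Smallest gap between an occurrence of `first` and one of `second`.

    Returns None if either word is missing from the text.
    """
    pos = positions(text)
    if first not in pos or second not in pos:
        return None
    return min(abs(a - b) for a in pos[first] for b in pos[second])


near = min_distance("The president spoke at the White House today", "white", "house")
far = min_distance("Our house has a white picket fence and a white door", "white", "house")
```

In the first sentence the two words are adjacent (`near` is 1), in the second they are three words apart (`far` is 3). A ranking that favors small distances would put the first page ahead of the second for the query 'white house'.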

As a result of the analysis, all the data needed for page ranking become available. As noted above, a key feature of the ranking algorithm ("recipe") is that the number and ranks of pages linking to the page to be ranked figure prominently, but over the years many factors have been added. According to rumor some 200 factors influence Google's ranking, but the detailed algorithm is a closely guarded secret, partly to stifle the competition, but just as importantly, to defeat cheaters (see next section).

Even though all of this processing is done "off-line", i.e. divorced from the actual query, it still presents an enormous technical challenge. It requires very large computer resources and very efficient processing algorithms to complete the work in days or hours rather than centuries or millennia.

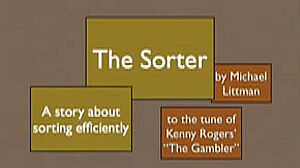

As remarkable as this is, what I find really impressive is that Google can respond so quickly in real time to a query, literally in the blink of an eye. And to top it off: it is now (2024) reported to handle some 100,000 queries per second. I am not going to pretend to know how it is done except in the most general terms. (Ref. 2 below gives a good description of the conceptual architecture of Google's search engine.) An important part of the explanation has got to be that disk access is kept to a minimum, and most, if not all, of the index is kept in primary memory (what used to be called "core memory" in the days of ferrite beads...). Of course, the search algorithms have been massaged to yield maximum speed. (A whole volume of Donald Knuth's "The Art of Computer Programming" is devoted to sorting and searching.) Moreover, the work is highly distributed. Literally hundreds of computers may be involved in the search.
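
The distributed part can at least be sketched in principle. In the Python example below, the index is split into three made-up "shards", each held in memory; every shard returns its own best hits, and the partial lists are merged into a final top list. The data in `SHARDS`, the scores and the function names are all hypothetical, and the shards are queried one after the other here, whereas a real system would presumably query them in parallel on separate machines.

```python
import heapq

# Hypothetical shards: each holds an in-memory slice of the index,
# mapping a word to {page: precomputed score}.
SHARDS = [
    {"bike": {"p1": 0.9, "p2": 0.4}},
    {"bike": {"p3": 0.7}, "repair": {"p3": 0.5}},
    {"bike": {"p4": 0.8, "p5": 0.1}},
]


def search_shard(shard, word, k):
    """Return the k best (score, page) pairs that this shard knows about."""
    postings = shard.get(word, {})
    return heapq.nlargest(k, ((score, page) for page, score in postings.items()))


def search(shards, word, k=3):
    """Ask every shard for its best hits and merge them into one top-k list."""
    partial = [hit for shard in shards for hit in search_shard(shard, word, k)]
    return [page for score, page in heapq.nlargest(k, partial)]


top = search(SHARDS, "bike")
```

Since each shard only needs to report its own k best hits, the amount of data to be merged stays small no matter how large the complete index is.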

Now, if somebody could explain why my last PC needed at least half a minute to start up, even though I regularly scanned it for unwelcome cookies and intruders...

Real-life complications

In theory there is no difference between theory and practice. In practice there is. (Yogi Berra)

In private life, it is becoming increasingly difficult to imagine life without an efficient search engine for tasks such as finding the opening hours of a public library, or finding a bus schedule, or looking up a phone number, or finding an address on a map, or helping the kids with their schoolwork. But the impact that really counts has been the drastically decreasing cost of many business transactions: identifying a supplier or partner or allowing a customer to find you, often eliminating "the middle man". For a small business, gaining a high Google ranking for its web site can be a matter of survival. Imagine that you are running a bicycle repair shop in a mid-sized town. When people google for help repairing their bikes, you definitely want your shop to appear high on the first page of Google suggestions and not as, say, number 47.

Therefore people are very anxious to design web pages in a way that will appeal to Google's search algorithm. Google, on the other hand, is critically dependent on being seen as impartial and fair in its ranking. (You can pay to get to the top of the list for certain search terms, but this is clearly marked as a paid advertisement.) This has led to a cat-and-mouse game, where designers try to outguess their competitors on how Google rates pages, while Google continually tries to improve its algorithm so that various "tricks" will not distort the ranking process.

Google's periodic modifications used to be called the "Google dance", when rankings could be drastically affected. I believe that modifications are now being implemented more gradually and cautiously.

Some of the tricks ("spamdexing") that have been used to try to fool Google and other search engines are:

- Cloaking, i.e. using redirects or programming to make Google believe that the content is different from what is actually presented to the user.

- Keyword stuffing or inserting keywords that do not relate to the content of the site. Sometimes a long list of terms that have nothing to do with content.

- Presenting white text on white background. Seen by Google, invisible to the user.

- Copying content from elsewhere to improve the ranking of a page, perhaps changing a few words here and there.

- Exchanging links with suspicious sites to improve your incoming link count.
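
How might copied content with "a few words changed here and there" be detected? I do not know what Google actually does, but one textbook approach is to compare overlapping word sequences ("shingles") of two texts. The Python sketch below is an illustration under that assumption; the function names, the shingle size of three words and the sample texts are my own.

```python
import re


def shingles(text, size=3):
    """Return the set of all runs of `size` consecutive words in the text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a, b):
    """Share of shingles the two texts have in common (0.0 to 1.0)."""
    sa, sb = shingles(a), shingles(b)
    if not sa and not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


original = "we repair all kinds of bikes quickly and at a fair price"
copy = "we repair all kinds of bicycles quickly and at a fair price"
unrelated = "the library opens at nine on weekdays"

copied_score = jaccard(original, copy)
unrelated_score = jaccard(original, unrelated)
```

Changing a single word leaves more than half of the shingles intact (`copied_score` is 7/13, about 0.54), while the unrelated text scores 0. A threshold somewhere in between would flag the copy.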

Such attempts carry a high probability of being detected and getting your site blacklisted. Remember, there is no redress. Google does not respond to complaints about ranking or being totally left out. But you will be OK if you follow Google's guidelines.

I may myself have been guilty of a transgression: in a few places on my site I have imported text that I wanted to comment upon, in the form of an image of the text rather than the text itself, so as to avoid a possible blacklisting for plagiarizing content. With the progress made in optical text recognition, I may have jumped out of the frying pan and into the fire...

Of course, page authors trying to influence their rating is not the only problem faced by the designer of a search engine. There are critical trade-offs to be made between quality, speed and cost. They constantly change with technological progress (Moore's Law etc.), the size of databases, the composition of the user community, and what the competitors are doing.

There is the difficulty of understanding what the user is looking for. And there are many languages (and computer languages). Many words have double meanings. Many people carry the same names. What is topical changes with time and location, but finding the user's location may violate his privacy. In addition, the heterogeneity of the Web makes it difficult to assess the quality and probable usefulness of a page, especially with the growing importance of images, video and audio.

Users are looking for content, but the purpose of most web sites is to make money, so delivering content of high value to the user is not necessarily the top priority. The same goes for most search engines: as their primary purpose is to make money, their designers face a difficult choice between satisfying the average user and favoring commercial sites, which are more likely to generate revenue. Generally, search engines pick up more information about users than the users would be comfortable with. "Personalizing" the search might antagonize the user by divulging how much the search engine "knows" about him or her. (Personally, I am even more uncomfortable with the information that credit card companies collect.)

Then there are malicious sites trying to infect computers with viruses, trojans, pop-up advertisements, or to clandestinely hijack them. They should be blocked by your firewall and anti-virus program, but they constitute a challenge to search engines as well.

Another issue is "dead links". When should a search engine consider a page truly dead? Perhaps the server in question is just off-line for maintenance? Some pages are dynamic, i.e. their content changes frequently. How do you assess their quality? Do you gauge them frequently or do you just sample them occasionally?

The user interface is more important than you might think. A cluttered search page, perhaps loaded with advertisements, will annoy most users. And it should be tolerant of misspellings.
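
Tolerance of misspellings can be illustrated with Python's standard `difflib` module, which finds the known word most similar to what the user typed. This is just a sketch: the tiny `VOCABULARY` and the function name `suggest` are my own assumptions, and a real search engine would presumably draw on its query logs rather than a fixed word list.

```python
import difflib

# Hypothetical vocabulary; a real one would hold millions of terms.
VOCABULARY = ["bicycle", "repair", "library", "schedule", "restaurant"]


def suggest(word, vocabulary=VOCABULARY):
    """Return the word itself if known, else the closest known word, else None."""
    if word in vocabulary:
        return word
    matches = difflib.get_close_matches(word, vocabulary, n=1, cutoff=0.6)
    return matches[0] if matches else None


first = suggest("libary")
second = suggest("restarant")
third = suggest("xyz")
```

Here "libary" is corrected to "library" and "restarant" to "restaurant", while "xyz" resembles nothing in the list and yields `None`.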

The search engine designer also needs to measure the impact of small changes to his algorithm. This is not an easy task.
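
To give an idea of what such a measurement might look like, here is a sketch of one standard yardstick from the information retrieval literature, the mean reciprocal rank: for each test query, note the position of the first relevant hit and average the reciprocals. I am not claiming that any particular search engine uses it; the function names and the test queries below are made up.

```python
def reciprocal_rank(results, relevant):
    """1/position of the first relevant result, or 0.0 if there is none."""
    for position, page in enumerate(results, start=1):
        if page in relevant:
            return 1.0 / position
    return 0.0


def mean_reciprocal_rank(runs):
    """Average the reciprocal rank over (results, relevant pages) pairs."""
    return sum(reciprocal_rank(results, relevant) for results, relevant in runs) / len(runs)


# Hypothetical outcome of two test queries, before and after a small change.
old_runs = [(["a", "b", "c"], {"b"}), (["d", "e", "f"], {"f"})]
new_runs = [(["b", "a", "c"], {"b"}), (["d", "f", "e"], {"f"})]

old_score = mean_reciprocal_rank(old_runs)
new_score = mean_reciprocal_rank(new_runs)
```

The change moves the relevant page up one step for both queries, and the score rises from about 0.42 to 0.75. The hard part in practice is of course not the arithmetic but deciding which pages count as relevant, and for whom.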

In short, developing a superior search engine is a much more complex undertaking than the pioneers could reasonably have foreseen. There are many issues to be confronted that a purely theoretical approach would not have uncovered. Fortunately, technological progress has been rapid enough to permit addressing most of these problems effectively.

Sentiments of humanity

The section title is inspired by a phrase I found in a United Nations document: "Prompted by sentiments of humanity..." To me it sounds a little sinister, as if those sentiments were the exception rather than the rule among UN diplomats...

It would appear that the founders of Google are idealists at heart, or at least that they started out that way. I very much doubt that they had aspirations of becoming mega-tycoons when they started their research at Stanford University, and their decision to start the company seems to have been influenced by complaints that they were using up most of the University's computer resources. They had only vague ideas of how they were going to make money, and for some time they resisted the idea of accepting advertisers on Google, all in line with the spirit of sharing that the Web's pioneers had brought to the Internet. They tried hard to make Google a special kind of company, with a campus atmosphere, an informal dress code, liberal work rules, etc. Engineers are allowed to devote 20% of their time to projects of their choosing.

Yet, as Google's power and wealth have grown, many people have begun to distrust the company. There have been questions about how issues of intellectual property rights (Google Books) and personal privacy (Google Earth and Google Street View) are handled, and many disapprove of its tacit acceptance of Chinese censorship. Above all, there is the potential for a massive invasion of privacy. And small business owners, in particular, are critically dependent on Google to ensure "a level playing field", as noted above.

Google is well aware of the potential for misuse of its power. As early as 2001, it made the phrase "Don't be evil" a central tenet of its philosophy. It also spends considerable sums on philanthropy. Of course, these may be token gestures shrewdly calculated to win sympathy for the company, but I am inclined to give them credit for being sincere, at least when it comes to the founders of the company.

Further reading and viewing

1. A skillfully produced video about Google from its beginnings right up to its battles in 2010 with the likes of Apple, Microsoft, and Facebook. Very interesting. 48 min. - "This video is no longer available due to a copyright claim by Bloomberg Finance L.P."

2. The Anatomy of a Large-Scale Hypertextual Web Search Engine, Sergey Brin and Lawrence Page, 1998. — A clear and well-written account of the background and initial design of the Google search engine by its creators.

3. Challenges in Building Large-Scale Information Retrieval Systems, Jeffrey Dean, 2009. — A video overview of the development of Google's search engine over the last decade, especially infrastructure, by a senior Google engineer. 65 min.

4. The Real World Web Search Problem, Eric Glover, 2007. — A video lecture on the difference between theory and practice in web search. It runs for 2 hours, but the accompanying index and slides make it easy to find the most interesting parts.